This week, I took the Certified Ethical Hacker exam – again… The first attempt at the exam didn’t make for a happy story. I was convinced that they couldn’t possibly have lost all of my work – the database must have stored each answer as I submitted it, surely?!

I went backwards and forwards with EC-Council to no avail. They offered an “upgrade” to an exam held at a test centre but that was a step backwards as far as I was concerned. Having assured me that they’d resolved the issue with the web app, I rescheduled the online exam. I’ll come back to the exam process later but I want to talk about certifications in general, why I was looking at CEH certification and how I prepared for it.

Certifications

I’ve been working in software development for a long time and I’ve never felt a definite need for certification. I’ve done a lot of recruitment over the years and looked at a lot of software developer CVs; certifications have always been a bonus feature, never a requirement.

Impostor syndrome seems to be very common amongst software developers and I count myself in that group. I have, from time to time, wondered if a certification would validate that I can actually develop software against a recognised standard, say Microsoft Certified Solution Developer.

Looking at the certifications available though, I’ve never been content that they were validating the right thing so I’ve focused on learning and continuous development, comparing notes with peers in the industry to benchmark myself.

So why did I even get into this process at all?

Why I started on the path to CEH

I’ve been into security for years – it was probably down to WarGames. The school computer lab was the location of a wargame of a different type where we’d pwn each other’s accounts, or the teacher’s. This was a more innocent time – a long time before the UK Computer Misuse Act!

Information security is intertwined with software development. The internet makes the software that we write accessible to users good and bad, and I’ve always been very conscious of that. One of the earliest bits of professional software that I wrote would hash the contents of a website so that unauthorised modifications could be detected.
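
A minimal sketch of that hashing idea in Python. The original software isn't shown here, so the choice of SHA-256, the function names and the in-memory "site" are all assumptions made for illustration: take a digest of every page once, store it as a baseline, then compare later digests against it.

```python
import hashlib


def fingerprint(pages):
    """Map each page path to the SHA-256 hex digest of its content."""
    return {path: hashlib.sha256(body).hexdigest() for path, body in pages.items()}


def changed_pages(baseline, current):
    """Return the paths that were added, removed or modified since the baseline."""
    paths = set(baseline) | set(current)
    return sorted(p for p in paths if baseline.get(p) != current.get(p))


# Hypothetical site content, held in memory to keep the sketch self-contained.
original = {"/index.html": b"<h1>Welcome</h1>", "/about.html": b"<p>About us</p>"}
tampered = {"/index.html": b"<h1>Pwned</h1>", "/about.html": b"<p>About us</p>"}

baseline = fingerprint(original)
print(changed_pages(baseline, fingerprint(tampered)))
```

A real monitor would read the pages from disk or over HTTP and keep the baseline somewhere an attacker can't rewrite it.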

Security doesn’t get enough attention from software developers though so I’ve been speaking on the subject for a couple of years to developer user groups. I gave a lightning talk on OWASP ZAP at .NET Oxford recently. Here’s a talk I did to London .NET at Skills Matter last year.

I’ve spoken at DDD Reading, DDD South West and I’m speaking about authentication at DDD East Anglia next month.

I’ve been to many events and user groups as an attendee and it’s fantastic to give something back as a speaker. I’ve been incredibly lucky to be chosen to speak at all of these events but I’d like to do more. Along the way though, I’ve felt that impostor syndrome creeping back in – why should these people spend their incredibly valuable time listening to me?

My job title has never been Security Consultant, Pen Tester or CISO but I’ve got the knowledge to share. Wouldn’t it be useful if I had a certificate to prove it!

Selecting the Certified Ethical Hacker certification was straightforward. I was familiar with that cert and the competing qualifications. CEH is broadly recognised, even approved for meeting US Department of Defense job requirements.

All I needed to do was pass the exam.

Preparation

With the knowledge in hand, I wondered if I really needed to spend £4,000 on an intensive 12-hour-a-day, 5-day course to get a certification. Sitting the exam, without the training, is just $750 US.

It would have been unwise to sit the exam with no preparation even with a broad knowledge of infosec. I needed to make sure I’d covered the syllabus. The key, for me, came from Pluralsight. They have a wide range of video-based training material and a CEH track from Troy Hunt and Dale Meredith.

I’ve followed Troy Hunt’s blog for years. I’ve been to his Hack Yourself First workshop. He’s a great public speaker. I didn’t know much about Dale Meredith but I do know the efforts that Pluralsight and their authors go to in producing content so I was happy he’d be able to deliver.

I work at ByBox and we encourage the team to use Pluralsight as part of their training. This means the Pluralsight subscription was ready to go.

There’s a further reason why video-based training was a good way to go for me and it’s an interesting one… cycling. Last year, I invested in a smart-trainer that turns a road bike into an interactive cycling game via Zwift. The photo below shows a similar setup to mine. This was great for improving my fitness over the winter but I found I could train and watch Pluralsight videos at the same time. Two types of training combined!

Handily, a Humble Bundle of security eBooks containing Certified Ethical Hacker Version 9 Study Guide came up so I added that to the training (but not on the bike). I knew some memorisation of tools and processes would be required. For example, I don’t spend every day using nmap and you’re expected to know all of the command line options.

At somewhere between 1.5x and 2x speed, I got through the 76-hour Pluralsight CEH track in around 40 hours. I probably spent another 5 hours on extra learning. Was I ready?

The Exam

As I mentioned, my first attempt at the CEH exam didn’t go well. As I started the four-hour exam at the second attempt, I did a bit of QA testing of my own. I talked about the mechanics of the exam process in that blog post so I won’t go into them here but, having moved on to the second question, I went back to review the first, knowing that had caused an error last time round. Everything seemed to be working properly – perhaps I’d get through this thing?

You’re allowed nearly two minutes per question and I found that was plenty. To make sure I didn’t run out of time inadvertently, I’d mark questions for review where I wasn’t comfortable with the answer so I could return to them later. I spent about an hour and a half answering all the questions and an extra 15 minutes going over questions that needed extra attention.

I probably spent some extra time trying to interpret the questions and answers. It was clear that they’re not all from the same author – some aren’t from native English speakers:

Secure Sockets Layer (SSL) use the asymmetric encryption both (public/private key pair) to deliver the shared session key and to achieve a communication way

I have a lot of respect for those writing in a second language. The English language is fluid and we’re not computers so we can interpret meaning but I think clarity of questions would benefit everyone.

Infosec is a broad subject and the CEH syllabus is sufficiently broad to match. It goes into enough depth so that you have a good understanding of the topics but there’s always room to go deeper. Armed with this level of knowledge, it’s easier to find out more. I’m not likely to spend much time in buffer overflows but if I need to, I have a good start.

The Result

I did wonder how quickly I’d get results from the online exam. Would the answers have to go for some sort of verification? I was very thankful that the result came immediately – I’d passed.

Taking the exam twice was not what I had planned and I’m relieved that the process is now complete. I do believe that “every day’s a school day” and I’ve certainly learnt through training for the CEH certification. I won’t be standing up at speaking events with my certificate in hand but I will add it to the bio and hopefully people will feel it’s worth seeing me speak. See you at the next one?

Monday was an interesting day; I sat the Certified Ethical Hacker exam with the intention of receiving certification from EC-Council. I'll leave my reasons for taking the exam for another post - hopefully one saying that I've passed…

It's been years since I last sat an exam. Back then, I would have been sat in an exam hall with pen and paper. But things have changed and exams can be taken online – remotely monitored with a proctor watching via webcam and screen-sharing. Great – I don’t even need to leave the house!

To take the exam, you start a webcam and chat session with a proctor at a company like ProctorU. They check your ID and you point your webcam around your workspace and around the room so they can see that you don’t have smartphones or any other way of cheating. It struck me that all those taking this “hacker” exam would be thinking about all the ways they could beat this system, if it weren’t for the “ethical” bit.

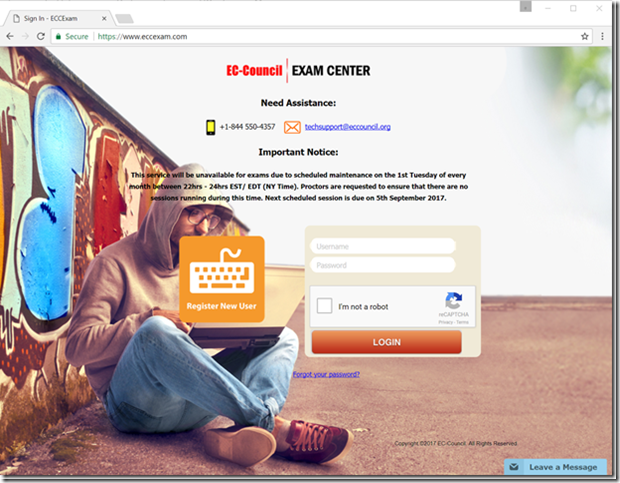

The proctor then takes you to the exam website where you log in, prove that you’ve paid with a one-time voucher code and select the exam that you’re taking. The proctor logs in and you accept various conditions (no break for four hours!) and you’re ready to start. I won’t dwell on the fact that the proctor is using credentials that are clearly common to everyone at the company – it’s not the first time I’ve seen that. As with all hackers, this one is wearing a hoodie but this is an ethical hacker so it’s beige.

I went through this process and began my exam. There are 125 multiple choice questions to answer – select a radio button and click the Answer button and the website progresses to the next question. I’m assuming that this is a PHP web app by the file extension in the URL. It’s straightforward stuff – the browser submits the form and the website returns the next page of question and answers. There’s also a checkbox to mark a question for review and a drop down list containing all the questions that have been answered with a Review button next to it.

I’m about 18 questions in and I think I’ve been a bit hasty on the previous question. I select it from the list and hit Review. That’s when I hit the first problem.

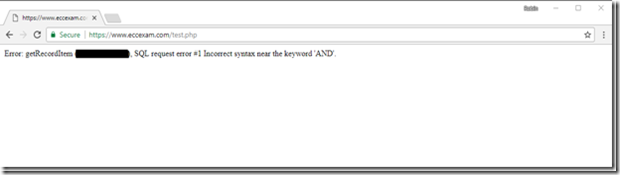

Ok, so I get a page with an error message reporting a SQL syntax error. I’ve redacted what would seem to be a database table name in the error message – I don’t want to be the one sharing their database schema around the web.

Error: getRecordItem (<TABLE NAME HERE>), SQL request error #1 Incorrect syntax near the keyword 'AND'.

Improper Error Handling is covered in the OWASP Top 10 under Security Misconfiguration but I don’t have time to worry about that during my exam. I get in touch with the proctor to see if they can help. They try refreshing the page and opening new browser windows and going back through the exam selection process but it’s no good – the error message seems to be here to stay. They escalate to a colleague who tries the same things with no success. My exam voucher code is single use so I can’t even restart the exam.
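
To illustrate the error handling point, here is a minimal Python sketch of the usual remedy: log the database failure privately and show the user only a generic message with a reference to quote. Everything in it is an assumption for illustration. The `answers` table, the `load_answer` helper and the use of the standard library's sqlite3 module are hypothetical and say nothing about how the exam site is really built.

```python
import logging
import sqlite3
import uuid

log = logging.getLogger("exam")


def load_answer(conn, question_id):
    """Return (status, body) without leaking database details to the caller."""
    try:
        row = conn.execute(
            "SELECT choice FROM answers WHERE question_id = ?", (question_id,)
        ).fetchone()
    except sqlite3.Error:
        # Full details, including the stack trace, go to the server-side log only.
        incident = uuid.uuid4().hex[:8]
        log.exception("answer lookup failed (incident %s)", incident)
        return 500, f"Something went wrong. Please quote reference {incident}."
    if row is None:
        return 404, "No answer recorded for that question."
    return 200, row[0]


conn = sqlite3.connect(":memory:")
print(load_answer(conn, 18))  # the table doesn't exist yet, so this is the generic 500

conn.execute("CREATE TABLE answers (question_id INTEGER PRIMARY KEY, choice TEXT)")
conn.execute("INSERT INTO answers VALUES (18, 'B')")
print(load_answer(conn, 18))
```

The parameterised query (`?`) also keeps user input out of the SQL text, which avoids one common route to syntax errors like the one above.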

At this point, the proctor says “We’ve sent an email to our contacts and they should contact you within three to six days” and I’m shocked. I can’t compare the stress levels now to those I had during university exams but the adrenaline is flowing. I’ve got a busy work calendar at the moment and rescheduling isn’t going to be easy. I’ve got a conference call following the exam. I go and get my mobile phone (having asked permission!) and call the EC-Council helpline.

I get various ring tones as the call is bounced around the world. There’s a delay of a few seconds and communicating is a challenge. A few emails and phone calls and some time later and my one-time voucher code has been reset and I can restart the exam.

At this point, it’s an hour and a half after I first started and I restart the exam. I need to take more care as I answer the questions this time as I’m not going to risk trying to review questions later for fear of the same problem re-occurring.

I make good progress and I have time to spare as I reach the end of the questions. I select the last answer option and hit the Answer button on question 125.

Oh wow. My head is now in my hands as I start to think about what’s just happened. That error message again.

I contact the proctor. I’ve been handed over to a new person so I have to explain the situation again. Browser is refreshed. New tabs are opened. Error remains.

I fire off an email to EC-Council technical support. The error message basically tells me that the answers are stored in a database so they should be able to retrieve them so I don’t have to re-sit the exam, right?

Apologies for the inconvenience caused.

We have reset your voucher code. Please reschedule the exam date for tomorrow.

We appreciate your patience and understanding of this matter.

I reply. Are they telling me that the answers aren’t stored in the database?

We apologize for the inconvenience caused, we regret to inform you that as the exam was not submitted successfully due to the technical difficulties we do not have logs from your exam to issue you with your certification.

We are happy to help you with the exam voucher of your choice valid for a period of 1 year and you can proceed to reschedule your exam with it.

Have a nice day!

I’ve replied again and I’m waiting for a response. Did the application really fail to store any of my answers to the database? Why would I repeat any exam that’s so likely to lose my data and waste my time? If ProctorU recorded my screen for the duration of the exam, can we rebuild the data?

For the answers to these questions and more, watch this space!

Microsoft, community and .NET Renaissance

I wasn’t there in the beginning. In 2003, whilst I was taking some time out to go snowboarding, a small group of pioneers were inciting the .NET revolution. Dissatisfied with the oppression of Microsoft, they wrought the iron of .NET into the sword of Alt in the fires of pain.

Anyway, I was in the Alps and the snow was great! So much so that I went back in 2004.

But the revolution was brewing.

Every day’s a school day

“You have to run just to keep up”

— My old boss, around 1997.

The pace of change in software development is significant and “every day’s a school day” has epitomised my software development career, where it can feel like you’re running just to keep up. I could have learnt different things in different ways but I’ve never stopped learning.

There have certainly been points at which my learning accelerated. One such moment is where new team members have joined the team and have brought new experience and insight. Another is joining the developer community.

Discovering community

Although I was learning, I was almost a Dark Matter Developer. I was getting stuff done but I was blissfully unaware of the vibrant community of developers that surrounded me. One of the team introduced me to the blog of Brighton (UK)-based developer Mike Hadlow. It was while reading the comments on this blog post that I noticed a phrase that piqued my interest – “ALT.NET”.

What was this strange “ALT.NET UK” that they talked about? I Googled this magical thing and found that the Alt.NET UK conference was imminent — I registered immediately and I was in.

I met an amazing group of people at the Alt.NET UK unconference (including Mike Hadlow!). That led me to the London .NET user group, coding dojos, Progressive.NET, OWASP, DevOpsDays and the list goes on. I have many friends from that first Alt.NET event.

What’s in a name?

“There are only two hard things in Computer Science: cache invalidation and naming things, and off-by-one errors.”

— Phil Karlton et al

In Computer Science, naming things is hard. The same applies here. As Scott Hanselman said around the time of the first US conference, the Alt.NET name is polarizing. Some took it to mean anti-Microsoft. As with others, I took “alternative” to mean something that embraces choice.

Why shouldn’t we build software using any tools irrespective of where they come from? The key is that we build great software. The same is true of languages and frameworks. I love C# and .NET but if Python is more appropriate for a particular use case, use that. Why can’t we look at the communities around other languages for inspiration too? We can, and that’s Alt.NET.

There was a feeling that Alt.NET was elitist and the Alt meant an alternative group of people. Perhaps. However, I wasn’t part of the elite and Alt.NET won me over. And the Alt.NET name still has a lot of power. It can be a rallying cry.

.NET Renaissance

I want a wider set of developers to discover a rich .NET ecosystem. Microsoft is treading a difficult path which seems to be guided by dragging large Enterprise customers using .NET Framework into the future that is .NET Core at the expense of new developers, who might struggle in adopting .NET Core. Will backwards compatibility and the complexity it brings mean that they choose PHP, Node.js, Go or something else instead?

Microsoft is very different to the organisation that it was in the last decade. There are great people there doing great things but it is a large organisation and can do a lot of damage with little missteps. I want to see Microsoft continue to foster the ecosystem without stifling it. Much progress has been made here. Visual Studio wizards allow you to choose a unit testing framework other than MSTest; Newtonsoft Json.NET and jQuery were embraced, and not extinguished. These are limited examples but show the right intent. But even then, when Microsoft “bless” something, does that mean the others can’t compete?

.NET Core has amazing potential. It’s enabled a cross-platform world that was unthinkable at one time. It is even present in AWS Lambda ready for the next evolution of cloud computing. We have an opportunity to grasp all of that potential as a community and run with it.

Ian Cooper has detailed some of the reasons we need to take up the baton. Mark Rendle and Dylan Beattie have already written much more about the possibilities. As Gáspár Nagy says, it’s “high time to think positively”. I’m certainly not the only one looking forward to being a part of the .NET Renaissance!

There’s a new HTTP header on the block - HTTP Public Key Pinning (HPKP). It allows the server to publish a security policy in the same vein as HTTP Strict Transport Security and Content Security Policy.

The RFC was published last month so browser support is limited: it is supported in Chrome 38, Firefox 35 and newer. However, there are helpful articles from Scott Helme, Tim Taubert and Robert Love on the topic and OWASP has some general info on certificate and key pinning. Scott has even built support for HPKP reporting into his helpful reporting service - https://report-uri.io/.

Although Chrome and Firefox will honour your public key pins, testing the header is slightly tricky as they haven't implemented reporting yet (as of Chrome 42 and Firefox 38). I spent some time trying to coax both into reporting, working under the assumption that they must have implemented the whole spec, right? It seems not.

In writing this, I also wanted to note the command I used to calculate the certificate digest that's used in the header. In contrast to other examples, this connects to a remote host to get the certificate (including allowing for SNI), outputs to a file and exits openssl when complete.

echo |

openssl s_client -connect robinminto.com:443 -servername robinminto.com |

openssl x509 -pubkey -noout | openssl pkey -pubin -outform der |

openssl dgst -sha256 -binary | base64 > certdigest.txt
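
For reference, the digest step and the resulting header can be sketched in Python. This is an illustration under stated assumptions, not a drop-in tool: the function names are made up, the key bytes are placeholders rather than real DER-encoded public keys, and the reporting URL is an example address. The header layout follows RFC 7469, which also expects at least one backup pin alongside the pin for the key in use.

```python
import base64
import hashlib


def pin_sha256(spki_der):
    """Base64 of the SHA-256 digest of a DER-encoded SubjectPublicKeyInfo."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def hpkp_header(pins, max_age, report_uri=None, include_subdomains=False):
    """Build the value of a Public-Key-Pins header from a list of pins."""
    parts = [f'pin-sha256="{pin}"' for pin in pins]
    parts.append(f"max-age={max_age}")
    if include_subdomains:
        parts.append("includeSubDomains")
    if report_uri:
        parts.append(f'report-uri="{report_uri}"')
    return "; ".join(parts)


# Placeholder bytes stand in for the DER output of `openssl pkey -pubin -outform der`.
primary = pin_sha256(b"placeholder primary key")
backup = pin_sha256(b"placeholder backup key")

header = hpkp_header(
    [primary, backup],
    max_age=5184000,  # 60 days, chosen arbitrarily for the example
    report_uri="https://example.com/hpkp-report",
)
print("Public-Key-Pins:", header)
```

Sending the same value under `Public-Key-Pins-Report-Only` asks the browser to report violations without enforcing them, which is only useful once browsers implement reporting.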

I won't be using HPKP in my day job until reporting support is available and I can validate that the configuration won't break clients. There's great potential here though once the support is available.

trebuildulation

[tree-bild-yuh-ley-shuh n]

noun

plural noun: trebuildulations

: grievous trouble or severe trial whilst rebuilding or repairing

: made up word

: nod to Star Trek

"the trebuildulations of a server"

What started with a warning about disk space turned into a complete server rebuild which occupied all of my free time this week. I’m writing down some of the issues for the benefit of my future self and others.

Old Windows

When I see a disk space warning, I head into the drive and look for things to delete. In this case, I immediately noticed Windows.old taking up 15GB – this remnant of the upgrade to Server 2012 R2 last year was ripe for removal.

If this were Windows 7, I would run Disk Cleanup but on Server that requires the Desktop Experience feature to be installed. It isn’t by default and can’t be removed once it is. So, I set about trying to remove the folder at the command line.

At this point, it seems I failed to remove the junctions from Windows.old but succeeded in taking ownership, resetting permissions and removing the folder. The folder was gone but all was not well. On restart, the Hyper-V management service wouldn’t start.

I forget the error but I eventually determined that the permissions had been reset on C:\ProgramData\Application Data. Unrestricted by folder permissions, Windows links this folder to itself resulting in C:\ProgramData\Application Data\Application Data\Application Data\Application Data\Application Data... This causes lots of MAX_PATH issues at the very least.

Despite correcting the permissions, I wasn’t quite able to fix everything and Hyper-V continued to fail. A rebuild was in order.

We couldn't create a new partition

Microsoft have refined the Windows Server installation process over the years so that it is now normally relatively painless, even without an unattended install. Boot from a USB key containing the installation media, select some localisation options, enter a licence key and off it goes. Not this time.

My RAID volume was visible to the installer and I could delete, create and format partitions but when I came to the next step in the process:

We couldn't create a new partition or locate an existing one. For more information, see the Setup log files.

This foxed me for a while. I tried DISKPART from the recovery prompt, I tried resetting disks to non-RAID and I tried disabling S.M.A.R.T. in the BIOS. Nothing worked. I did notice mention of removing USB devices and disconnecting other hard drives suggesting there’s some hidden limit on the number of drives or partitions that the installer can handle. I could have removed each of the three data drives one by one to see if that theory had merit but I decided to jump in and remove all three.

Success! A short while later I had a working Windows Server 2012 R2.

Detached Disks in the Storage Pool

I reconnected the three data drives and now came time to see if Storage Spaces would come back online as promised.

The steps were straightforward:

- Set Read-Write Access for the Storage Space

- Attach Virtual Disk for each of the virtual disks

- Online each of the disks

The disks were back online and the data was available. Great!

This was a short-lived success story – the disks were offline after reboot and had to be re-attached. Thankfully, I was not alone and a PowerShell one-liner fixed the issue.

Get-VirtualDisk | Set-VirtualDisk -IsManualAttach $False

Undesirable Desired State Configuration Hang

I thought I’d take the opportunity to switch my imperative PowerShell setup scripts for Desired State Configuration-based goodness. This was a pretty smooth process but there was one gotcha.

DSC takes care of silent MSI installations but EXE packages require the appropriate “/quiet” arguments. The result of missing arguments in my configuration script meant that DSC sat waiting for someone to click an invisible dialog box in the EXE’s installer.

Having fixed my script, I killed the PowerShell process and re-tried to be presented with this:

Cannot invoke the SendConfigurationApply method. The PerformRequiredConfigurationChecks method is in progress and must return before SendConfigurationApply can be invoked.

The issue even survived a reboot.

Again, I was not alone and some more PowerShell later, my desired state is configured.

I’m pleased to report the server is back to a happy state and all is well in its world.

I’ve been automating the configuration of IIS on Windows servers using PowerShell. I’ll tell anyone who’ll listen that PowerShell is awesome and combined with source control, it’s my way of avoiding snowflake servers.

To hone the process, I’ll repeatedly build a server with all of its configuration from a clean image (strictly speaking, a Hyper-V checkpoint) and I’m occasionally getting an error in a configuration script that has previously worked:

new-itemproperty : Filename: \\?\C:\Windows\system32\inetsrv\config\applicationHost.config

Error: Cannot write configuration file

Why is this happening? The problem is intermittent so it’s difficult to say for sure but it does seem to occur more often if the IIS Management Console is open. My theory is that if the timing is right, Management Console has a lock on the config file when PowerShell attempts to write to it and this error occurs.

UPDATE

I'm now blaming AppFabric components for this issue. I noticed the workflow service was accessing the IIS config file and also found this article on the IIS forums - AppFabric Services preventing programmatic management of IIS. The workflow services weren't intentionally installed and we're using the AppFabric Cache client NuGet package so I've removed the AppFabric install completely and haven't had a recurrence of the problem.

I’ve been carrying an RFID-based Oyster card for years. It’s a fantastic way to use the transport network in London especially if you pay-as-you-go rather than using a seasonal travelcard. You just “touch in and out” and fares are deducted.

It’s no longer necessary to have to work out how many journeys you’re going to do to decide if you need a daily travelcard or not – the amount is automatically capped at the price of a daily travelcard, if you get to that point. “Auto top-up” means money is transferred from your bank to your card when you get below a certain threshold so you never have to visit a ticket office (especially given they’ve closed them all) or a machine. It’s amazing to think they’ve been around since 2003.
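
The capping rule is easy to picture in code. The sketch below is a simplification with made-up numbers: the fares and the cap are hypothetical, and real capping depends on zones and time of day, none of which is modelled here.

```python
from decimal import Decimal


def charge_for_day(fares, daily_cap):
    """Charge each journey in order, never letting the day's total exceed the cap."""
    total = Decimal("0")
    charges = []
    for fare in fares:
        # Charge the full fare, or whatever is left before the cap if that is less.
        charge = min(fare, daily_cap - total)
        charges.append(charge)
        total += charge
    return charges


# Hypothetical fares and cap, not real TFL prices.
fares = [Decimal("2.30")] * 4
cap = Decimal("6.40")

charges = charge_for_day(fares, cap)
print(charges, "total:", sum(charges))
```

With these figures the third journey is only partly charged and the fourth is free, because the cap has already been reached.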

More recently, it has become possible to pay for travel with a contactless payment card a.k.a. Visa PayWave or Mastercard Paypass. However, that leads to problems if, like most people, you carry more than one card in your wallet or purse – the Oyster system may not be able to read your Oyster card or worse, if your contactless payment card is compatible, it takes payment from the first card it can communicate with. If you have an Oyster seasonal travelcard, you don’t want to pay again for your journey by paying with a credit card.

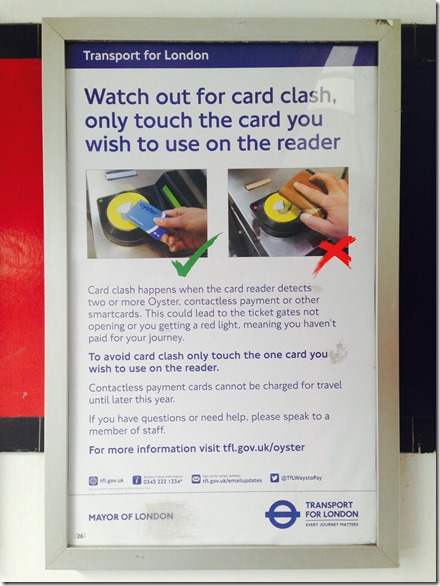

TFL have recently started promoting this phenomenon under the name “card clash”.

There’s even a pretty animation:

I first noticed card clash in 2010 when I was issued a new credit card with contactless payment. It went straight in my wallet with my Oyster and other payment cards and the first time I tried to touch in by presenting my wallet (as I’ve always done with Oyster) – beeeep beeeep beeeep (computer says no).

It didn’t take me long to work out that the Oyster reader couldn’t distinguish between two RFID-enabled cards. I soon had a solution in the form of Barclaycard OnePulse – a combined Oyster and Visa card. Perfect!

I could now touch in and out without having to get my card out of my wallet again. But not for long…

I don’t know about you but I like to carry more than one credit card so if one is declined or faulty, I can still buy stuff. I had a card from Egg Banking for that purpose. To cut a long story slightly shorter, Egg were bought by Barclaycard who then replaced my card with a new contactless payment enabled card. Oh dear, card clash again.

I wrote to Barclaycard to ask if they could issue a card without contactless functionality. Here’s the response I got:

All core Barclaycards now come with contactless functionality; this is part of ensuring our cardholders are ready for the cashless payment revolution. A small number of our credit cards do not have contactless yet but it is our intention these will have the functionality in due course. That means we are unable to issue you a non-contactless card.

Please be assured that contactless transactions are safe and acceptance is growing. It uses the same functionality as Chip and PIN making it safe, giving you the same level of security and as it doesn't leave your hand there is even less chance of fraud.

I trust the information is of assistance.

Should you have any further queries, do not hesitate to contact me again.

I was very frustrated by this – I was already ready for the “cashless payment revolution” with my OnePulse card! Now, I was back in the situation where I would have to take the right card out of my wallet – not the end of the world but certainly not the promised convenience either. Barclaycard’s answer is to carry two wallets – helpful!

I’m certainly not the only one who has issues with card clash. I’m not the only one to notice TFL’s campaign to warn travellers of the impending doom as contactless payment cards become more common.

So what’s the solution? If your card company isn’t flexible about what type of cards they issue and you can’t change to one that is (I hear HSBC UK may still be issuing cards without contactless payment on request), another option is to disable the contactless payment functionality. Some people have taken to destroying the chip in the card by putting a nail through it, cutting it with a knife or zapping it. However, that also destroys the chip and PIN functionality which seems over the top. You can probably use the magnetic strip to pay with your card but presenting a card with holes in it to a shop assistant is likely to raise concerns.

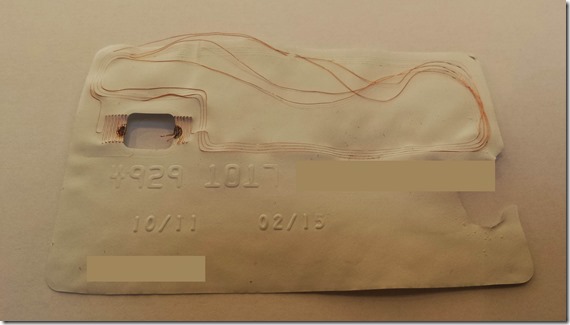

RFID cards have an aerial which allows communication with the reader and supplies power to the chip via electromagnetic induction (the zapping technique above overloads the chip with a burst of energy by induction). This means we can disable RFID without disabling chip and PIN by breaking the aerial circuit, meaning no communication or induction.

The aerial layout varies. I dissected an old credit card to get an idea where the aerial was in my card (soaking in acetone causes the layers of the card to separate). Here you can see that the aerial runs across the top and centre of the card.

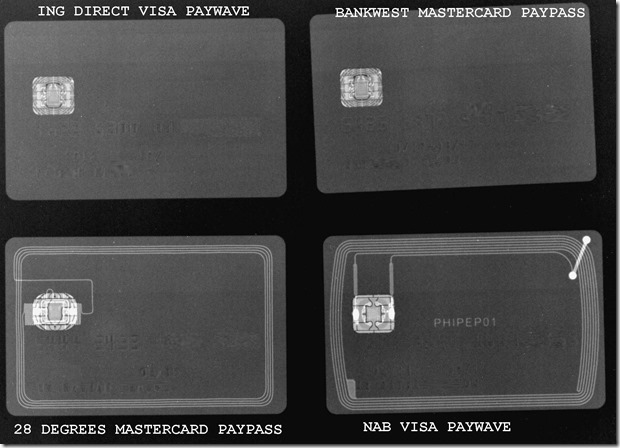

There are some helpful x-rays on the interwebs now showing some alternate layouts.

The aerial can be broken with a hole punch or soldering iron but I’ve found that making a small cut across the aerial is sufficient. It’s not necessary to cut all the way through the card – all that’s needed is to cut through to the layer containing the aerial. Start with a shallow cut and make it deeper if required. From the images above, a cut to the top of the card is likely to disable most cards.

The central cut on this card wasn’t effective. The cut at the top was.

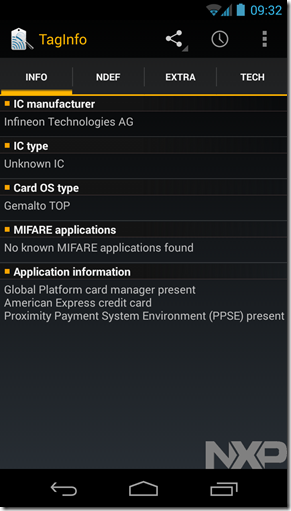

If you have an NFC-enabled smartphone, it’s possible to test the card to check that contactless is disabled. I’m using NFC TagInfo on a Samsung Galaxy Nexus. Here’s the card above before:

and after the aerial is cut (the chip no longer registers):

Job done! The card continues to work with chip and PIN but doesn’t interfere with Oyster.

Barclaycard have thrown another spanner in the works for me – OnePulse is being withdrawn. The solution seems to be to switch to contactless payments instead of Oyster. Here’s hoping that TFL can finish implementing contactless payments (promised “later in 2014”) before my OnePulse is withdrawn.

Disclaimer: if you’re using sharp knives, have a responsible adult present. If you damage yourself or your cards, don’t blame me!

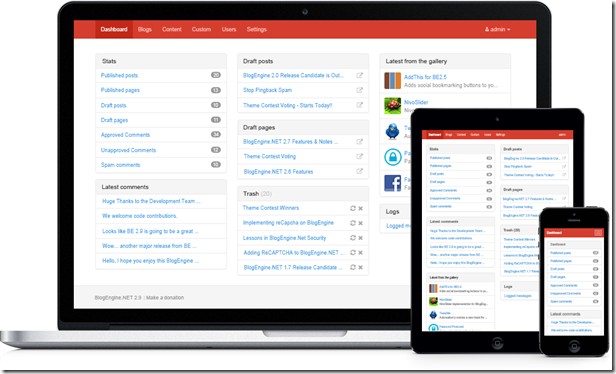

Part 2. A new responsive design.

Mobile is the new black. Mobile phones are increasingly used to access the web and websites need to take that into account.

I’m using BlogEngine.NET which can deliver pages using a different template to browsers with small screens but that’s not ideal for larger phones and tablets. However, there is a better way – responsive web design. With a responsive design, CSS media queries and flexible images provide a flexible layout that is adjusted based on the screen (or browser) size.

The BlogEngine.NET team have recognised that responsive design is the way forward so the recently released version uses the Bootstrap framework for the default template and administration UI. They’ve done a great job.

As part of moving my blog to Windows Azure as described in part 1, I felt a new look was in order but I didn’t want to use the new BlogEngine.NET template so set about designing my own.

Bootstrap isn’t the only framework that can help you build a responsive design. There’s Foundation, HTML5 Boilerplate, HTML KickStart and Frameless to name a few. I wanted a framework without too much in-built styling. Although Bootstrap can be customised, there are a lot of choices if you take that route.

I chose Skeleton as the framework for my design. It’s a straightforward grid system with minimal styling. And I’ve retained the minimal styling, only introducing a few design elements. My design is inspired by the Microsoft design language (formerly Metro) – that basically comes down to flat, coloured boxes. Can you tell I’m not a designer?

An added complexity is the introduction of high pixel density displays such as Apple’s Retina. Images that look fine on a standard display look poor on Retina and the like. Creating images with twice as many pixels is one solution but I chose the alternative route of Scalable Vector Graphics. modernuiicons.com provides an amazing range of icons (1,207 at time of writing), intended for Windows Phone but perfect for my design. They appear top-right and at the bottom of the blog.

Another website that came in very handy was iconifier.net – an icon generator for Apple icons and favicons.

So, I’m now running on Windows Azure with a new responsive design. Perhaps I’ll get round to writing some blog articles that justify the effort?

Part 1. Getting up and running on Windows Azure.

Microsoft’s cloud computing platform has a number of interesting features but the ability to create, deploy and scale web sites is particularly interesting to .NET developers. Many ISPs offer .NET hosting but few make it as easy as Azure.

The web server setup and maintenance is all taken care of and managed by Microsoft, which I already get with my current host Arvixe. However, apps can be deployed directly from GitHub and Bitbucket (and more) and it’s possible to scale to 40 CPU cores with 70 GB of memory with a few clicks. If you don’t need that level of performance, you might even be able to run on the free tier.

Here’s how I got up and running (I’ve already gone through the process of setting up an Azure account).

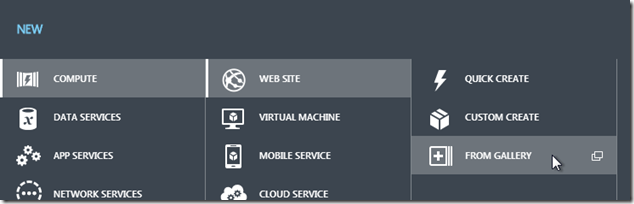

Create a new website from the gallery:

In the gallery, select BlogEngine.NET. Choose a URL (something.azurewebsites.net) and a region (I choose North or West Europe as those are closest to my location).

Wait. 90 seconds later, a default BlogEngine.NET website is up and running.

I’ve created a source control repository in Mercurial. This contains:

- extensions (in App_Code, Scripts and Styles)

- settings, categories, posts, pages (in App_Data)

- the new design for the blog (in Themes) – see part 2

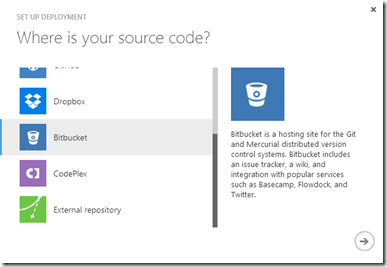

Next, I need to configure my web site to deploy from Bitbucket where I’ve pushed that repo. “Set up deployment from source control” is an option in the dashboard:

Select Bitbucket and you’ll be asked to log in and allow Azure to connect to your repos:

Azure will then deploy that repo to the website from the latest revision.

All that’s left is to log into the blog and make a couple of changes. Firstly, I delete the default blog article created by BlogEngine.NET. I’m displaying tweets on my blog using the “Recent Tweets” widget so I install that.

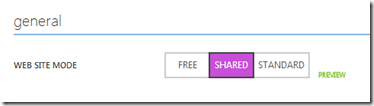

I’m using a custom domain rather than something.azurewebsites.net. That means I have to scale up from the free tier to shared:

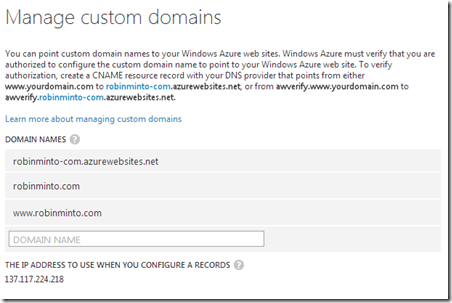

Windows Azure must verify that I am authorized to use my custom domain. I do this by making a DNS change (my DNS records are hosted with DNSimple), adding CNAME records pointing from awverify.www.robinminto.com to awverify.robinminto-com.azurewebsites.net and from awverify.robinminto.com to awverify.robinminto-com.azurewebsites.net. If I wasn’t using the domain at the time, I could have added the CNAME records without the awverify prefix. Verification step complete, I can then add the domain names to Azure.
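
The naming pattern for those verification records can be sketched in a few lines of Python. This is only an illustration of the pattern described above: the function name `awverify_records` is made up for this example and it doesn't perform any DNS lookups or changes.

```python
def awverify_records(site_name, hostnames):
    """Return (name, target) pairs for the awverify CNAME records.

    site_name is the Azure site name (the 'something' in
    something.azurewebsites.net); hostnames are the custom host names.
    """
    target = f"awverify.{site_name}.azurewebsites.net"
    return [(f"awverify.{host}", target) for host in hostnames]


# Host names and site name taken from the paragraph above.
records = awverify_records(
    "robinminto-com", ["www.robinminto.com", "robinminto.com"]
)
for name, target in records:
    print(f"{name} CNAME {target}")
```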

Finally, having checked everything is working properly I change the DNS entries (as above without the awverify prefix) to point to my new web site and it’s job done!

I took the opportunity to update the design that I use. I’ll deal with that in part 2.

I’m lamenting the demise of Forefront TMG but I still think it’s a great product and continue to use it. Of course, I’m currently planning what I’m going to replace it with but I expect it’ll have to be a combination of products to get the features that I need.

Anyway, I’m currently doing some log analysis and wanted to capture some useful information about the process. TMG is using SQL Server Express for logging and I want to do some analysis in SQL Server proper.

First, export the logs from TMG. I won’t repeat the steps from that post but in summary:

- Run the Import and Export Data application (All Programs / Microsoft SQL Server 2008 / Import and Export Data). Run this elevated/as administrator.

- Choose the database (not today’s databases or you’ll cause contention).

- Select Flat File Destination and include Column names in the first data row.

- Select Tab {t} as the Column delimiter.

- Export.

I took the files from this export and wanted to import them into SQL Server. Rather than import everything into varchar fields, I wanted to reproduce the schema that TMG uses. I couldn’t find the schema on the interwebs so I grabbed the databases from an offline TMG and scripted the tables.

Here’s the script to create the FirewallLog table:

CREATE TABLE [dbo].[FirewallLog](

[servername] [nvarchar](128) NULL,

[logTime] [datetime] NULL,

[protocol] [varchar](32) NULL,

[SourceIP] [uniqueidentifier] NULL,

[SourcePort] [int] NULL,

[DestinationIP] [uniqueidentifier] NULL,

[DestinationPort] [int] NULL,

[OriginalClientIP] [uniqueidentifier] NULL,

[SourceNetwork] [nvarchar](128) NULL,

[DestinationNetwork] [nvarchar](128) NULL,

[Action] [smallint] NULL,

[resultcode] [int] NULL,

[rule] [nvarchar](128) NULL,

[ApplicationProtocol] [nvarchar](128) NULL,

[Bidirectional] [smallint] NULL,

[bytessent] [bigint] NULL,

[bytessentDelta] [bigint] NULL,

[bytesrecvd] [bigint] NULL,

[bytesrecvdDelta] [bigint] NULL,

[connectiontime] [int] NULL,

[connectiontimeDelta] [int] NULL,

[DestinationName] [varchar](255) NULL,

[ClientUserName] [varchar](514) NULL,

[ClientAgent] [varchar](255) NULL,

[sessionid] [int] NULL,

[connectionid] [int] NULL,

[Interface] [varchar](25) NULL,

[IPHeader] [varchar](255) NULL,

[Payload] [varchar](255) NULL,

[GmtLogTime] [datetime] NULL,

[ipsScanResult] [smallint] NULL,

[ipsSignature] [nvarchar](128) NULL,

[NATAddress] [uniqueidentifier] NULL,

[FwcClientFqdn] [varchar](255) NULL,

[FwcAppPath] [varchar](260) NULL,

[FwcAppSHA1Hash] [varchar](41) NULL,

[FwcAppTrusState] [smallint] NULL,

[FwcAppInternalName] [varchar](64) NULL,

[FwcAppProductName] [varchar](64) NULL,

[FwcAppProductVersion] [varchar](20) NULL,

[FwcAppFileVersion] [varchar](20) NULL,

[FwcAppOrgFileName] [varchar](64) NULL,

[InternalServiceInfo] [int] NULL,

[ipsApplicationProtocol] [nvarchar](128) NULL,

[FwcVersion] [varchar](32) NULL

) ON [PRIMARY]

and here's the script to create the WebProxyLog table:

CREATE TABLE [dbo].[WebProxyLog](

[ClientIP] [uniqueidentifier] NULL,

[ClientUserName] [nvarchar](514) NULL,

[ClientAgent] [varchar](128) NULL,

[ClientAuthenticate] [smallint] NULL,

[logTime] [datetime] NULL,

[service] [smallint] NULL,

[servername] [nvarchar](32) NULL,

[referredserver] [varchar](255) NULL,

[DestHost] [varchar](255) NULL,

[DestHostIP] [uniqueidentifier] NULL,

[DestHostPort] [int] NULL,

[processingtime] [int] NULL,

[bytesrecvd] [bigint] NULL,

[bytessent] [bigint] NULL,

[protocol] [varchar](13) NULL,

[transport] [varchar](8) NULL,

[operation] [varchar](24) NULL,

[uri] [varchar](2048) NULL,

[mimetype] [varchar](32) NULL,

[objectsource] [smallint] NULL,

[resultcode] [int] NULL,

[CacheInfo] [int] NULL,

[rule] [nvarchar](128) NULL,

[FilterInfo] [nvarchar](256) NULL,

[SrcNetwork] [nvarchar](128) NULL,

[DstNetwork] [nvarchar](128) NULL,

[ErrorInfo] [int] NULL,

[Action] [varchar](32) NULL,

[GmtLogTime] [datetime] NULL,

[AuthenticationServer] [varchar](255) NULL,

[ipsScanResult] [smallint] NULL,

[ipsSignature] [nvarchar](128) NULL,

[ThreatName] [varchar](255) NULL,

[MalwareInspectionAction] [smallint] NULL,

[MalwareInspectionResult] [smallint] NULL,

[UrlCategory] [int] NULL,

[MalwareInspectionContentDeliveryMethod] [smallint] NULL,

[UagArrayId] [varchar](20) NULL,

[UagVersion] [int] NULL,

[UagModuleId] [varchar](20) NULL,

[UagId] [int] NULL,

[UagSeverity] [varchar](20) NULL,

[UagType] [varchar](20) NULL,

[UagEventName] [varchar](60) NULL,

[UagSessionId] [varchar](50) NULL,

[UagTrunkName] [varchar](128) NULL,

[UagServiceName] [varchar](20) NULL,

[UagErrorCode] [int] NULL,

[MalwareInspectionDuration] [int] NULL,

[MalwareInspectionThreatLevel] [smallint] NULL,

[InternalServiceInfo] [int] NULL,

[ipsApplicationProtocol] [nvarchar](128) NULL,

[NATAddress] [uniqueidentifier] NULL,

[UrlCategorizationReason] [smallint] NULL,

[SessionType] [smallint] NULL,

[UrlDestHost] [varchar](255) NULL,

[SrcPort] [int] NULL,

[SoftBlockAction] [nvarchar](128) NULL

) ON [PRIMARY]

TMG stores IPv4 and IPv6 addresses in the same field as a UNIQUEIDENTIFIER. This means we have to parse them if we want to display a dotted quad, or at least find a function that will do it for us.

Here’s my version:

CREATE FUNCTION [dbo].[ufn_GetIPv4Address]

(

@uidIP UNIQUEIDENTIFIER

)

RETURNS NVARCHAR(20)

AS

BEGIN

DECLARE @binIP VARBINARY(4)

DECLARE @h1 VARBINARY(1)

DECLARE @h2 VARBINARY(1)

DECLARE @h3 VARBINARY(1)

DECLARE @h4 VARBINARY(1)

DECLARE @strIP NVARCHAR(20)

-- For an IPv4 address, the first four bytes of the binary form hold the octets in reverse order

SELECT @binIP = CONVERT(VARBINARY(4), @uidIP)

SELECT @h1 = SUBSTRING(@binIP, 1, 1)

SELECT @h2 = SUBSTRING(@binIP, 2, 1)

SELECT @h3 = SUBSTRING(@binIP, 3, 1)

SELECT @h4 = SUBSTRING(@binIP, 4, 1)

SELECT @strIP = CONVERT(NVARCHAR(3), CONVERT(INT, @h4)) + '.'

+ CONVERT(NVARCHAR(3), CONVERT(INT, @h3)) + '.'

+ CONVERT(NVARCHAR(3), CONVERT(INT, @h2)) + '.'

+ CONVERT(NVARCHAR(3), CONVERT(INT, @h1))

RETURN @strIP

END
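
If you want to sanity-check the byte handling outside SQL Server, the same logic can be sketched in Python. This is a rough equivalent rather than anything TMG provides: `get_ipv4_address` is a name I've made up, and the sample GUID assumes that an IPv4 address sits in the first group of the GUID, which is what the SQL function above relies on.

```python
import uuid


def get_ipv4_address(guid_text):
    """Decode an IPv4 address from a GUID string, mirroring ufn_GetIPv4Address."""
    # bytes_le uses the same layout SQL Server produces when converting a
    # UNIQUEIDENTIFIER to binary: the first group is stored little-endian.
    first_four = uuid.UUID(guid_text).bytes_le[:4]
    # The SQL function emits byte 4 first, so walk the slice in reverse.
    return ".".join(str(octet) for octet in reversed(first_four))


# Assumed sample value: 0xC0A80001 in the first group, i.e. 192.168.0.1.
sample_ip = get_ipv4_address("C0A80001-FFFF-0000-0000-000000000000")
print(sample_ip)  # prints 192.168.0.1
```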

Action values are stored as an integer so I’ve created a table to decode them:

CREATE TABLE [dbo].[Action](

[Id] [int] NOT NULL,

[Value] [varchar](50) NOT NULL,

[String] [varchar](50) NOT NULL,

[Description] [varchar](255) NOT NULL,

CONSTRAINT [PK_Action] PRIMARY KEY CLUSTERED ([Id] ASC)

)

INSERT INTO Action

( Id, Value, String, Description )

VALUES

(0, 'NotLogged', '-', 'No action was logged.'),

(1, 'Bind', 'Bind', 'The Firewall service associated a local address with a socket.'),

(2, 'Listen', 'Listen', 'The Firewall service placed a socket in a state in which it listens for an incoming connection.'),

(3, 'GHBN', 'Gethostbyname', 'Get host by name request. The Firewall service retrieved host information corresponding to a host name.'),

(4, 'GHBA', 'gethostbyaddr', 'Get host by address request. The Firewall service retrieved host information corresponding to a network address.'),

(5, 'Redirect_Bind', 'Redirect Bind', 'The Firewall service enabled a connection using a local address associated with a socket.'),

(6, 'Establish', 'Initiated Connection', 'The Firewall service established a session.'),

(7, 'Terminate', 'Closed Connection', 'The Firewall service terminated a session.'),

(8, 'Denied', 'Denied Connection', 'The action requested was denied.'),

(9, 'Allowed', 'Allowed Connection', 'The action requested was allowed.'),

(10, 'Failed', 'Failed Connection Attempt', 'The action requested failed.'),

(11, 'Intermediate', 'Connection Status', 'The action was intermediate.'),

(12, 'Successful_Connection', '- Initiated VPN Connection', 'The Firewall service was successful in establishing a connection to a socket.'),

(13, 'Unsuccessful_Connection', 'Failed VPN Connection Attempt', 'The Firewall service was unsuccessful in establishing a connection to a socket.'),

(14, 'Disconnection', 'Closed VPN Connection', 'The Firewall service closed a connection on a socket.'),

(15, 'User_Cleared_Quarantine', 'User Cleared Quarantine', 'The Firewall service cleared a quarantined virtual private network (VPN) client.'),

(16, 'Quarantine_Timeout', 'Quarantine Timeout', 'The Firewall service disqualified a quarantined VPN client after the time-out period elapsed.')

The next step is to import the exported text files into the relevant table in SQL Server. Note that the SQL Server Import and Export Wizard has a default length of 50 characters for all character fields – that won’t be sufficient for much of the data. I used the schema as a reference to choose the correct lengths.
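
One way to see which columns would overflow that 50 character default is to measure the exported file before importing it. The following is a minimal Python sketch, assuming a tab-delimited export with column names in the first row as described above. The function name `max_field_lengths` and the sample rows are invented for illustration.

```python
import csv
import io


def max_field_lengths(tsv_file):
    """Return the longest value seen for each column of a tab-delimited export."""
    reader = csv.DictReader(tsv_file, delimiter="\t", quoting=csv.QUOTE_NONE)
    fieldnames = reader.fieldnames or []
    longest = {name: 0 for name in fieldnames}
    for row in reader:
        for name in fieldnames:
            value = row[name] or ""  # short rows yield None for missing fields
            longest[name] = max(longest[name], len(value))
    return longest


# Made-up sample standing in for an exported file; a real export would be
# opened with open(path, newline="") using whatever encoding the export used.
sample = io.StringIO(
    "servername\tprotocol\tDestinationName\n"
    "TMG01\tTCP\t" + "a" * 60 + ".example.com\n"
    "TMG01\tUDP\t-\n"
)
lengths = max_field_lengths(sample)
too_long = {name: n for name, n in lengths.items() if n > 50}
print(too_long)
```

Any column that shows up in `too_long` needs a wider length in the wizard than the default.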

I can now look at the log data in ways that the log filtering can’t and without stressing the TMG servers.

-- Outbound traffic ordered by connections descending

SELECT ApplicationProtocol, A.String AS Action, COUNT(*)

FROM FirewallLog FL

INNER JOIN Action A ON A.Id = FL.Action

WHERE DestinationNetwork = 'External' AND FL.Action NOT IN (7)

GROUP BY ApplicationProtocol, A.String

ORDER BY COUNT(*) DESC

-- Successful outbound traffic ordered by connections descending

SELECT ApplicationProtocol, A.String AS Action, COUNT(*)

FROM FirewallLog FL

INNER JOIN Action A ON A.Id = FL.Action

WHERE DestinationNetwork = 'External' AND A.String IN ('Initiated Connection')

GROUP BY ApplicationProtocol, A.String

ORDER BY COUNT(*) DESC

-- Successful outbound traffic showing source and destination IP addresses, ordered by connections descending

SELECT ApplicationProtocol ,

dbo.ufn_GetIPv4Address(SourceIP) AS SourceIP ,

dbo.ufn_GetIPv4Address(DestinationIP) AS DestinationIP ,

COUNT(*) AS Count

FROM FirewallLog

WHERE ApplicationProtocol IN ( 'HTTP', 'HTTPS', 'FTP' )

AND DestinationNetwork = 'External'

AND Action NOT IN ( 7, 8, 10, 11 )

GROUP BY ApplicationProtocol ,

dbo.ufn_GetIPv4Address(SourceIP) ,

dbo.ufn_GetIPv4Address(DestinationIP)

ORDER BY COUNT(*) DESC
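
If you don’t have a SQL Server instance to hand, the shape of the first query can be tried out with Python’s built-in sqlite3 module. This is only a sketch: SQLite stands in for SQL Server, the tables are cut down to the columns the query touches, and the log rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE [Action] (Id INTEGER PRIMARY KEY, String TEXT NOT NULL);
    CREATE TABLE FirewallLog (
        ApplicationProtocol TEXT, DestinationNetwork TEXT, [Action] INTEGER);
    INSERT INTO [Action] VALUES (6, 'Initiated Connection'),
                                (7, 'Closed Connection'),
                                (8, 'Denied Connection');
    -- Invented sample rows, for illustration only.
    INSERT INTO FirewallLog VALUES
        ('HTTP', 'External', 6), ('HTTP', 'External', 6),
        ('HTTP', 'External', 7), ('DNS', 'External', 8),
        ('HTTPS', 'Internal', 6);
""")

# Outbound traffic, excluding closed connections, ordered by count descending.
rows = conn.execute("""
    SELECT FL.ApplicationProtocol, A.String AS [Action], COUNT(*) AS Connections
    FROM FirewallLog FL
    INNER JOIN [Action] A ON A.Id = FL.[Action]
    WHERE FL.DestinationNetwork = 'External' AND FL.[Action] NOT IN (7)
    GROUP BY FL.ApplicationProtocol, A.String
    ORDER BY COUNT(*) DESC
""").fetchall()

for row in rows:
    print(row)
conn.close()
```

With the sample rows above, the closed connection and the internal HTTPS row are filtered out, leaving one row for HTTP and one for DNS.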