We’ve recently uncovered an issue with the way I had configured web farm publishing in Microsoft Threat Management Gateway (TMG). When I say “we”, I include Microsoft Support, who really got to the bottom of the problem. Of course, they’re in a privileged position, as they have access to the product’s source code.

Perhaps I would have resolved it eventually, but I’m thankful for MS Support. I didn’t find anything on the web to help with this problem so, on the off chance it helps someone else, I thought I’d write it up.

The Symptoms

We’ve been switching our web publishing from Windows NLB to TMG web farms to balance load across our IIS servers, and we began seeing an intermittent issue. One minute we were serving pages successfully; the next, clients would receive an HTTP 500 error, “The remote server has been paused or is in the process of being started”, and a Failed Connection Attempt with HTTP Status Code 70 would appear in the TMG logs.

The issue would last for 30 to 60 seconds and then publishing would resume successfully. This would normally indicate that TMG had detected, using the farm’s connectivity verifiers, that no servers were available to respond to requests. However, the servers appeared fine from the perspective of our monitoring system (behind the firewall) and to clients connecting in a different way (either over a VPN or via a TMG single-server publishing rule).

The (Wrong) Setup

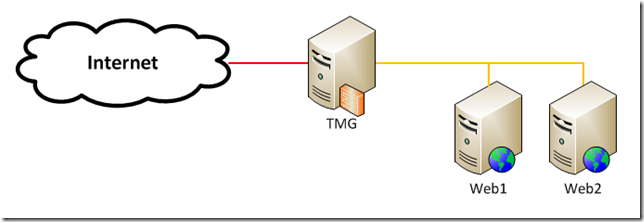

Let’s say we have a pair of web servers, Web1 and Web2, protected from the Internet by TMG.

Each web server has a number of web sites in IIS, each bound to port 80 and a different host header. All of the host headers for a single web server map to the same internal IP address like this:

| Host name | IP address |
|---|---|
| prod.admin.web1 | 172.16.0.1 |
| prod.cms.web1 | 172.16.0.1 |
| prod.static.web1 | 172.16.0.1 |
| prod.admin.web2 | 172.16.0.2 |
| prod.cms.web2 | 172.16.0.2 |
| prod.static.web2 | 172.16.0.2 |

In reality, you should fully qualify the host names (e.g. prod.admin.web1.examplecorp.local), but I haven’t for this example.

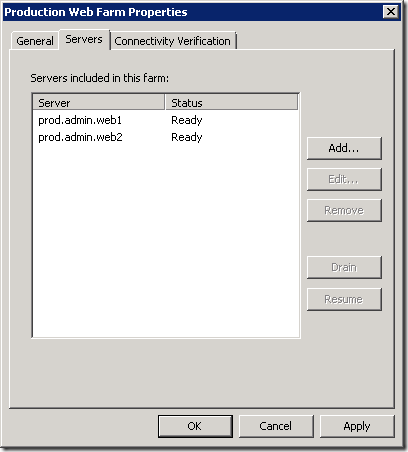

I’ll assume that you know how to publish a web farm using TMG. We have a server farm object configured for each web site, with each web server configured like this (N.B. this is wrong, as we’ll see later):

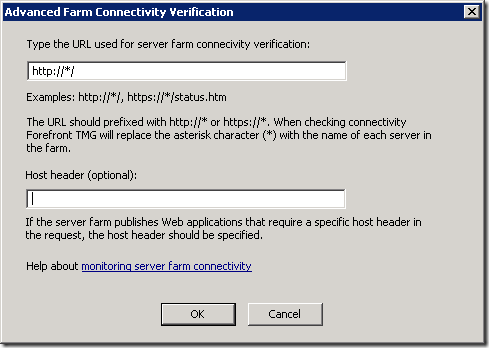

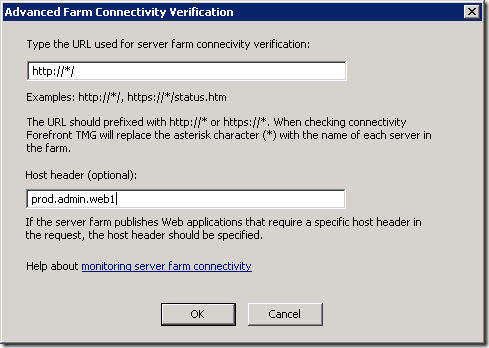

The benefit of this approach is that, because we’ve specified the host header (prod.admin.web1) rather than just the server name (web1), we don’t have to specify the host header in the connectivity verifier.

This setup appears to work, but under load, and as more web sites and farm objects are added, the symptoms start to appear.

The Problem

So what was happening? TMG keeps connections to the web servers open and reuses them for the reverse-proxied requests it forwards on behalf of clients on the Internet. Although the host headers for each web server all resolve to that server’s single IP address, TMG compares servers by host name, so they appear to be different servers. As a result, TMG opens and closes connections more often than it should.
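Microsoft Support’s explanation can be illustrated with a toy model. TMG’s source isn’t public, so this is not its implementation: it is a minimal Python sketch, assuming a proxy that parks reusable back-end connections in pools keyed by whatever server identifier it was configured with. The class `KeyedConnectionPool`, the `simulate` helper and the one-request-at-a-time workload are all hypothetical, and the model ignores idle timeouts and concurrent requests.

```python
from collections import defaultdict

# Example host headers and their internal IPs: two physical servers.
HOSTS = {
    "prod.admin.web1": "172.16.0.1",
    "prod.cms.web1": "172.16.0.1",
    "prod.static.web1": "172.16.0.1",
    "prod.admin.web2": "172.16.0.2",
    "prod.cms.web2": "172.16.0.2",
    "prod.static.web2": "172.16.0.2",
}


class KeyedConnectionPool:
    """Hypothetical pool: idle connections are filed under key_func(server)."""

    def __init__(self, key_func):
        self.key_func = key_func
        self.idle = defaultdict(int)  # key -> idle connections available for reuse
        self.opened = 0               # new back-end connections we had to open

    def acquire(self, server):
        key = self.key_func(server)
        if self.idle[key]:
            self.idle[key] -= 1       # reuse an idle connection filed under this key
        else:
            self.opened += 1          # nothing idle under this key: open a new one
        return key

    def release(self, key):
        self.idle[key] += 1           # hand the connection back to its pool


def simulate(requests, key_func):
    """Serve requests one at a time and return how many connections were opened."""
    pool = KeyedConnectionPool(key_func)
    for server in requests:
        pool.release(pool.acquire(server))
    return pool.opened


if __name__ == "__main__":
    requests = list(HOSTS) * 10  # round-robin across all six sites
    print("keyed by host name:", simulate(requests, key_func=lambda name: name))
    print("keyed by IP:       ", simulate(requests, key_func=HOSTS.__getitem__))
```

Running it reports six connections opened when pools are keyed by host name (one per host header) but only two when keyed by IP address (one per physical server). Under real load, each extra pool sees a smaller share of the traffic, so it is plausible that its idle connections are closed and re-opened more often.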

The Solution

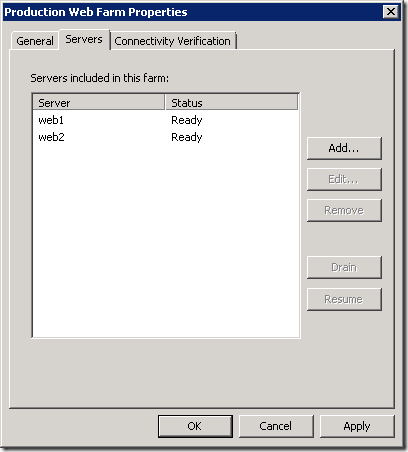

The solution is to specify the servers in the server farm object using the server host name and not the host header name. You have to do this for all farm objects that are using the same servers.

You then have to specify the host header in the connectivity verifier instead.
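What the verifier needs to do can be sketched as an HTTP GET sent to the farm member by its server name (or IP address), with the site named in the Host header so that IIS routes the request to the right binding. This is a hypothetical Python helper, not TMG’s code: the name `check_farm_member` and the rule that only a 2xx response counts as healthy are assumptions of the sketch.

```python
import http.client


def check_farm_member(server, host_header, path="/", port=80, timeout=5.0):
    """Hypothetical verifier: GET `path` from `server` for the site `host_header`.

    Returns True only for a 2xx response (an assumption of this sketch) and
    False for any other status or any connection or protocol error.
    """
    conn = http.client.HTTPConnection(server, port, timeout=timeout)
    try:
        # Connect to the physical server by name or IP, but name the IIS site
        # in the Host header so the request reaches the right binding.
        conn.request("GET", path, headers={"Host": host_header})
        status = conn.getresponse().status
        return 200 <= status < 300
    except (OSError, http.client.HTTPException):
        return False
    finally:
        conn.close()
```

For example, `check_farm_member("web1", "prod.admin.web1")` connects to web1 and asks it for the admin site; the farm object itself only ever refers to the server as web1.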

You could also use the IP address of the server. This is the configuration that Jason Jones recommends, but I prefer the clarity of a host name over an IP address; I’m trusting that DNS will work as it should and won’t add much overhead. If you need support with TMG, Jason is excellent, by the way.

Conclusion

Specifying the servers by host header name seemed logical to me. It was explicit and didn’t require that element of configuration to be hidden away in the connectivity verifier.

I did switch from host header names to IP addresses during testing, but it didn’t fix our problem, because I only changed a single farm object rather than all of them.

Although TMG could identify open server connections by IP address, it doesn’t: it uses the host name. This has to be taken into account when configuring farm objects. In summary, if you’re using multiple server farm objects for the same servers, make sure you specify each server consistently in every one of them: use either the IP address or an identical host name.
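If you have many farm objects, a quick consistency check can catch the mistake. The sketch below assumes you have listed each farm object’s servers by hand (it does not read TMG configuration); the function name `names_sharing_an_address` is hypothetical, and by default it resolves names with Python’s `socket.gethostbyname`, which returns an IP address string unchanged.

```python
import socket
from collections import defaultdict


def names_sharing_an_address(farms, resolve=socket.gethostbyname):
    """Find physical servers that the farm objects refer to by more than one name.

    farms maps a farm-object name to the server names (or IPs) listed in it;
    resolve turns a name into an IP address. Returns {ip: sorted names} for
    every IP that appears under two or more different names.
    """
    names_by_ip = defaultdict(set)
    for servers in farms.values():
        for server in servers:
            names_by_ip[resolve(server)].add(server)
    return {ip: sorted(names) for ip, names in names_by_ip.items() if len(names) > 1}


if __name__ == "__main__":
    # Offline example: a dict stands in for DNS, using this post's example hosts.
    dns = {
        "prod.admin.web1": "172.16.0.1", "prod.cms.web1": "172.16.0.1",
        "prod.admin.web2": "172.16.0.2", "prod.cms.web2": "172.16.0.2",
    }
    wrong = {
        "Admin farm": ["prod.admin.web1", "prod.admin.web2"],
        "CMS farm": ["prod.cms.web1", "prod.cms.web2"],
    }
    print(names_sharing_an_address(wrong, resolve=lambda n: dns.get(n, n)))
```

An empty result means every physical server is named identically across all farm objects. Mixing a host name in one farm with the IP address in another is also flagged, since the two would not match either.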

I was lucky enough to spend a week in the Seychelles this month. If your geography is anything like mine, you won’t know that the Seychelles is “an island country spanning an archipelago of 115 islands in the Indian Ocean, some 1,500 kilometres (932 mi) east of mainland Africa, northeast of the island of Madagascar” (thank you, Wikipedia).

In our connected world, the Internet reaches even into the Indian Ocean, so I wasn’t deprived of email, BBC News, Facebook and so on, but I did begin to wonder how such a small and remote island nation is wired up. It turns out that I was online via Atlas, a local ISP owned by Cable and Wireless.

Being a geek, I thought I’d run some tests, starting with SpeedTest. The result was download and upload speeds of around 0.6 Mbps and latency of around 700 ms. In the UK, the average broadband speed at the end of 2010 was 6.2 Mbps, and I would expect latency of less than 50 ms. Of course, I went to the Seychelles for the sunshine and not the Internet, but I was interested in how things were working.

So I ran a traceroute to see how my packets would traverse the globe back to the BBC in Blighty. I thought the Beeb was an appropriate destination.

Then I added some geographic information using MaxMind's GeoIP service and ip2location.com.

The result was this:

| Hop | IP Address | Host Name | Region Name | Country Name | ISP | Latitude | Longitude |
|---|---|---|---|---|---|---|---|
| 1 | 10.10.10.1 | | | | | | |
| 2 | 41.194.0.82 | | Pretoria | South Africa | Intelsat GlobalConnex Solutions | -25.7069 | 28.2294 |
| 3 | 41.223.219.21 | | | Seychelles | Atlas Seychelles | -4.5833 | 55.6667 |
| 4 | 41.223.219.13 | | | Seychelles | Atlas Seychelles | -4.5833 | 55.6667 |
| 5 | 41.223.219.5 | | | Seychelles | Atlas Seychelles | -4.5833 | 55.6667 |
| 6 | 203.99.139.250 | | | Malaysia | Measat Satellite Systems Sdn Bhd, Cyberjaya, Malay | 2.5 | 112.5 |
| 7 | 121.123.132.1 | | Selangor | Malaysia | Maxis Communications Bhd | 3.35 | 101.25 |
| 8 | 4.71.134.25 | so-4-0-0.edge2.losangeles1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 9 | 4.69.144.62 | vlan60.csw1.losangeles1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 10 | 4.69.137.37 | ae-73-73.ebr3.losangeles1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 11 | 4.69.132.9 | ae-3-3.ebr1.sanjose1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 12 | 4.69.135.186 | ae-2-2.ebr2.newyork1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 13 | 4.69.148.34 | ae-62-62.csw1.newyork1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 14 | 4.69.134.65 | ae-61-61.ebr1.newyork1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 15 | 4.69.137.73 | ae-43-43.ebr2.london1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 16 | 4.69.153.138 | ae-58-223.csw2.london1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 17 | 4.69.139.100 | ae-24-52.car3.london1.level3.net | | United States | Level 3 Communications | 38.9048 | -77.0354 |
| 18 | 195.50.90.190 | | London | United Kingdom | Level 3 Communications | 51.5002 | -0.1262 |
| 19 | 212.58.238.169 | | Tadworth, Surrey | United Kingdom | BBC | 51.2833 | -0.2333 |
| 20 | 212.58.239.58 | | London | United Kingdom | BBC | 51.5002 | -0.1262 |
| 21 | 212.58.251.44 | | Tadworth, Surrey | United Kingdom | BBC | 51.2833 | -0.2333 |
| 22 | 212.58.244.69 | www.bbc.co.uk | Tadworth, Surrey | United Kingdom | BBC | 51.2833 | -0.2333 |

What does that tell me? Well, I ignored the first hop, which is on the local network, and the second looks wrong, as it jumps to South Africa and straight back again. The first real stop on our journey is Malaysia, 3,958 miles away.

We then travel to Los Angeles, on the west coast of America: another 8,288 miles.

We wander around California. The latitude/longitude information for the Level 3 hops isn’t accurate (every one of them geolocates to the same point), so I’m basing this on the host names: we hop around LA, then to San Jose, followed by New York. Only 2,462 miles.

We rattle around New York and then London, 3,470 miles across the pond.

Our final destination is Tadworth in Surrey, just outside of London.

That’s just over eighteen thousand miles (and back) in less than a second – not bad, I say.
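The leg distances above are great-circle distances, which the haversine formula computes from pairs of coordinates. Here is a minimal Python sketch: the Seychelles, Malaysia and London coordinates come from the traceroute table (hops 5, 6 and 18), while the Los Angeles and New York coordinates are approximate city values added here as an assumption, since the Level 3 hops all geolocate to the same point. The 3,958.8-mile mean Earth radius is also an assumption, so expect small differences from the figures above, which may have come from a different tool or radius. For the Seychelles to Malaysia leg it gives roughly 3,950 miles.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius: an assumption of this sketch


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


# Seychelles, Malaysia and London are taken from the traceroute table;
# Los Angeles and New York are approximate city coordinates (assumed).
STOPS = [
    ("Seychelles", -4.5833, 55.6667),
    ("Malaysia", 2.5, 112.5),
    ("Los Angeles", 34.0522, -118.2437),
    ("New York", 40.7128, -74.0060),
    ("London", 51.5002, -0.1262),
]


def route_legs(stops):
    """Return (from, to, miles) for each consecutive pair of stops."""
    return [
        (a[0], b[0], haversine_miles(a[1], a[2], b[1], b[2]))
        for a, b in zip(stops, stops[1:])
    ]


if __name__ == "__main__":
    total = 0.0
    for src, dst, miles in route_legs(STOPS):
        total += miles
        print(f"{src} -> {dst}: {miles:,.0f} miles")
    print(f"Total: {total:,.0f} miles")
```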

p.s. don’t worry, I spent most of the time by the pool and not in front of a computer.